Stateful AI at Fortune 500 scale. Secured end-to-end.

Context pairs a three-tier memory stack with enterprise-grade controls, delivering frontier-model reasoning over tens of millions of tokens without compromising data sovereignty.

A deep-dive for CISOs, enterprise architects, and staff engineers. All claims link to primary evidence in our Trust Center.

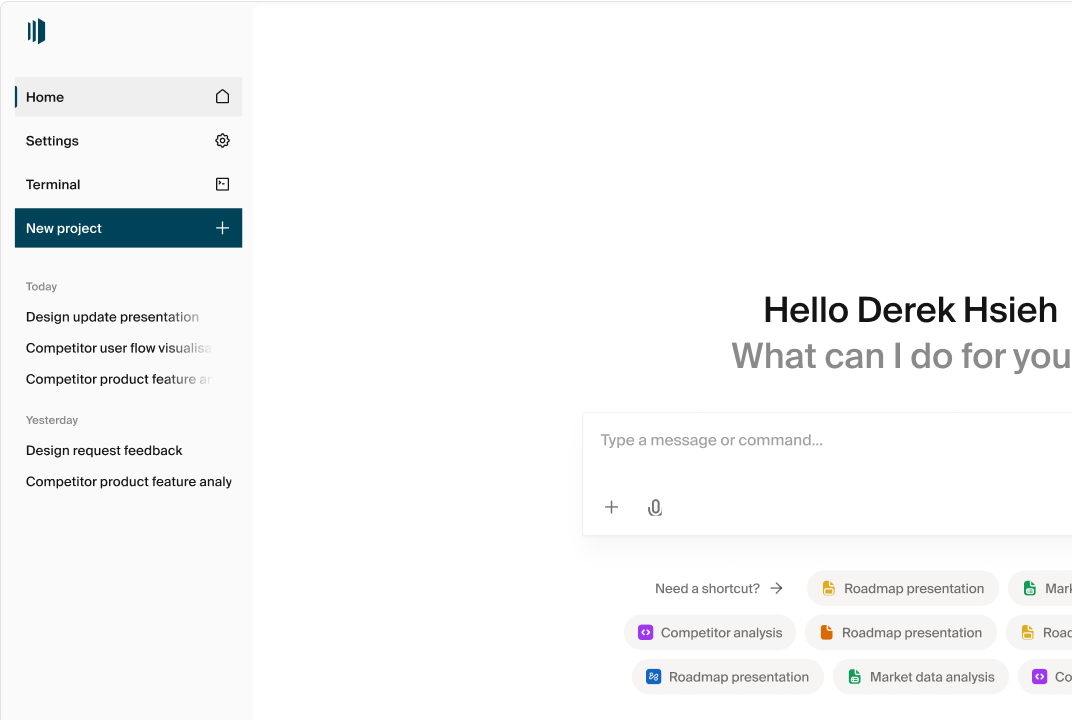

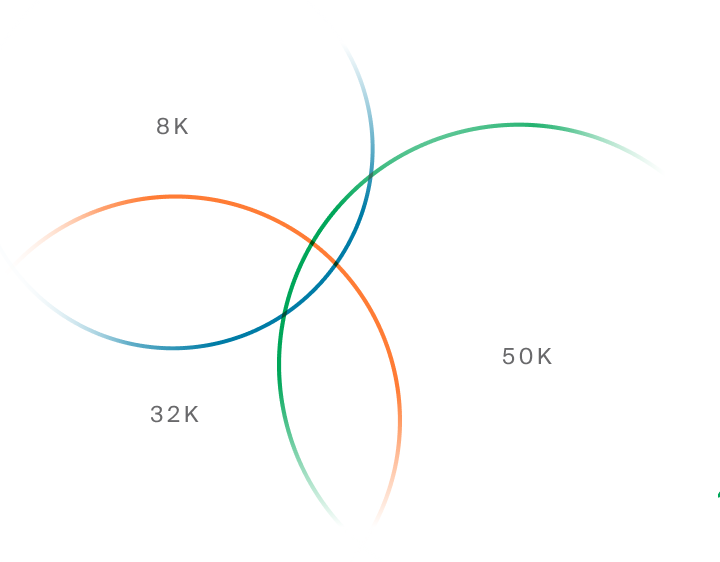

Architecture at a Glance.

Practical ceiling today: ≈ 50M tokens, a cost-latency sweet spot. The ceiling is expected to rise as hardware pricing falls.

| Tier | Capacity | Purpose | Key tech |
|---|---|---|---|
| Hot | ≤ 64k tokens | Real-time chat & task context. | Mix of frontier models. |
| Persistent | ~50M tokens | Stable, large-scale reasoning. | Triplet-creation model builds a graph of thoughts: logical relations & intermediate inferences, healed continuously. |
| Cold | Unlimited | Long-term archive. | Traditional RAG over encrypted object store. |
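
As a rough illustration of the three tiers above, the sketch below models them as plain data and picks the smallest tier whose capacity covers a given working set. The capacities come from the tier descriptions (taking 64k as 64,000 and ~50M as 50,000,000); the `Tier` class, the `pick_tier` helper, and the routing rule itself are assumptions made for illustration, not a description of how Context actually routes data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    name: str
    capacity_tokens: Optional[int]  # None means no stated limit
    purpose: str


# Capacities and purposes are taken from the tier descriptions above.
TIERS = [
    Tier("hot", 64_000, "Real-time chat and task context"),
    Tier("persistent", 50_000_000, "Stable, large-scale reasoning"),
    Tier("cold", None, "Long-term archive"),
]


def pick_tier(token_count: int) -> Tier:
    """Return the first (smallest) tier whose capacity covers token_count."""
    if token_count < 0:
        raise ValueError("token_count must be non-negative")
    for tier in TIERS:
        if tier.capacity_tokens is None or token_count <= tier.capacity_tokens:
            return tier
    raise AssertionError("unreachable: the cold tier is unbounded")


if __name__ == "__main__":
    for n in (12_000, 3_000_000, 900_000_000):
        print(n, "->", pick_tier(n).name)
```

A real system would likely weigh recency and access patterns as well as size; the size-only rule here is just the simplest way to show how the three capacities relate.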

Stateful Compute Paradigm.

Inspired by the shift from raw inference → test-time compute.

01

Structured Thoughts

We store structured “thoughts,” not raw vectors. Each thought is a reusable node in a knowledge graph.

02

Query-Time Reasoning.

At query time, a frontier LLM re-enters this graph, traversing relationships exactly as a pseudo-world model would—eliminating redundant computation and cognitive drift.

03

Linear Scaling

Swarm agents crawl, reflect, and rank results with linear cost scaling, so accuracy persists even at extreme context sizes.
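
The three steps above can be pictured with a toy example. The sketch stores thoughts as (subject, relation, object) triplets and answers a query by walking the graph breadth-first from a starting node instead of re-reading raw text. The `ThoughtGraph` class, its method names, and the sample triplets are all hypothetical; the actual triplet-creation model, the continuous healing process, and the swarm agents are not shown.

```python
from collections import defaultdict, deque
from typing import Dict, List, Tuple

Triplet = Tuple[str, str, str]  # (subject, relation, object)


class ThoughtGraph:
    """Toy in-memory store for structured thoughts (hypothetical API)."""

    def __init__(self) -> None:
        self._edges: Dict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add(self, subject: str, relation: str, obj: str) -> None:
        """Record one thought as a directed, labeled edge."""
        self._edges[subject].append((relation, obj))

    def traverse(self, start: str, max_hops: int = 2) -> List[Triplet]:
        """Breadth-first walk: collect every triplet within max_hops of start."""
        seen = {start}
        queue = deque([(start, 0)])
        found: List[Triplet] = []
        while queue:
            node, depth = queue.popleft()
            if depth == max_hops:
                continue  # do not expand nodes at the hop limit
            for relation, obj in self._edges.get(node, []):
                found.append((node, relation, obj))
                if obj not in seen:
                    seen.add(obj)
                    queue.append((obj, depth + 1))
        return found


if __name__ == "__main__":
    graph = ThoughtGraph()
    graph.add("Contract A", "renews_on", "2026-01-01")
    graph.add("Contract A", "governed_by", "Policy 7")
    graph.add("Policy 7", "superseded_by", "Policy 9")
    for triplet in graph.traverse("Contract A"):
        print(triplet)
```

Each node is expanded at most once, so the walk costs time linear in the number of edges it reaches. This only illustrates why traversing stored relations can avoid recomputation; it is not a claim about Context's internals.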

Why it matters.

Context Engine solves critical limitations of traditional AI systems.

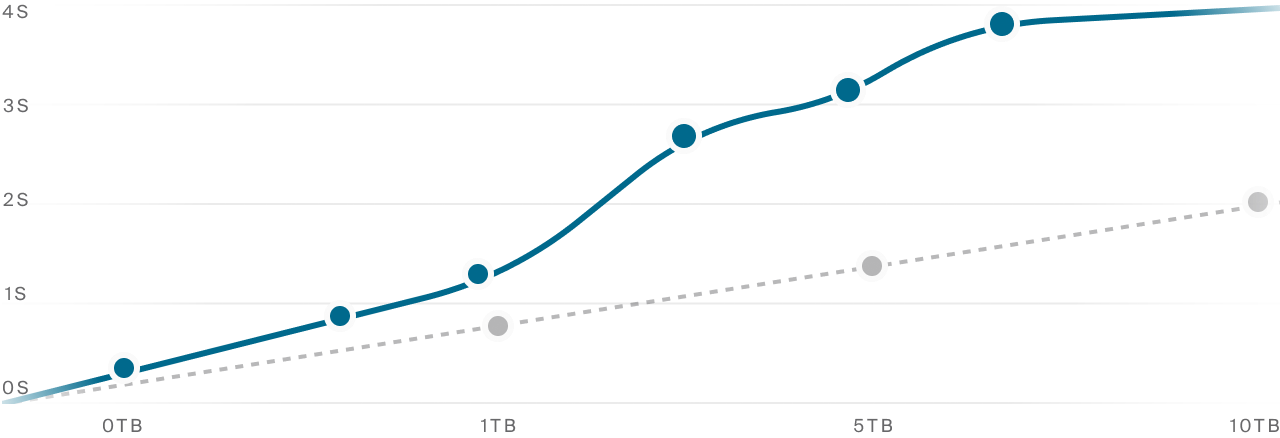

| | Context | Legacy bottleneck |
|---|---|---|
| Context Size | Robust reasoning at 50M+ tokens with no quality cliff. | Short context windows (8k-32k). |
| Performance | Linear crawl; P95 latency < 2s on 10TB store. | Latency spikes with corpus size. |
| Semantic Understanding | Graph-of-thoughts captures causality, chronology, and semantic drift. | Vector-only RAG misses nuance. |

Performance at enterprise scale.

Built for search, recommender systems, and autonomous agents. Our graph-of-thoughts approach delivers unmatched accuracy.

[Figure: benchmark bar charts, y-axis 0% to 100%. Values recoverable from the chart: Context 90.5%, Context 66.5%, "+24% improvement".]

HELMET 128k Benchmark: performance comparison on long-context reasoning tasks (higher is better).

LOFT 1M Benchmark: performance on million-token reasoning tasks (higher is better).

GraphRAG Precision: factual precision on 2TB enterprise corpus (relative comparison).

How Context differs from Microsoft, Google & Notion.

We care about deeper precision, zero tool-switching, and faster adoption.

| | Context (AI-native) | Legal AI suites |
|---|---|---|
| Scale | Graph-of-thoughts → stable at 50M+ practical tokens. | Reliant on 128k/1M window limits. |
| Architecture | Built ground-up around a memory engine & autonomous agents. | 40-year-old file formats with chatbot overlay. |
| Customization | Forward-deployed engineers codify your exact workflows & templates. | Limited to prompt templates. |
| Workflow | Single workspace; agents orchestrate end-to-end deliverables. | User must hop between Docs, Sheets, Slides. |

Security & Compliance.

Compliance

SOC 2 Type II (Apr 2025); ISO 27001 in progress.

Encryption

AES-256 at rest, TLS 1.3 in transit, envelope-encrypted secrets.

Residency

US, EU, APAC selectable; single-tenant VPC or on-prem.

Access

SAML/SCIM inheritance; field-level ACL mirrored in memory graph.

Audit

Immutable agent-action logs with tamper-evident hash chain.

Privacy

No customer data is used for training unless you opt in under the DPA.
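
To make the audit entry above concrete, here is a minimal sketch of a hash-chained log, in which each entry's hash covers the previous entry's hash so that editing any past entry breaks verification. It uses SHA-256 from Python's standard library; the record layout and the `append_entry` and `verify_chain` helpers are assumptions for illustration, not Context's actual log format.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, action: Dict[str, str]) -> str:
    """Hash the previous entry's hash together with this entry's action."""
    payload = json.dumps(action, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()


def append_entry(log: List[dict], action: Dict[str, str]) -> None:
    """Append an action, linking it to the hash of the last entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append(
        {"action": action, "prev": prev_hash, "hash": _digest(prev_hash, action)}
    )


def verify_chain(log: List[dict]) -> bool:
    """Recompute every hash in order; any edited entry makes this False."""
    prev_hash = GENESIS
    for entry in log:
        expected = _digest(prev_hash, entry["action"])
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log: List[dict] = []
    append_entry(log, {"agent": "summarizer", "op": "read", "doc": "contract-17"})
    append_entry(log, {"agent": "summarizer", "op": "write", "doc": "summary-17"})
    print(verify_chain(log))
    log[0]["action"]["op"] = "delete"  # simulate tampering with history
    print(verify_chain(log))
```

A chain like this detects edits only if the latest hash is also recorded somewhere an attacker cannot rewrite, which is why such logs are usually paired with an external anchor or write-once storage.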

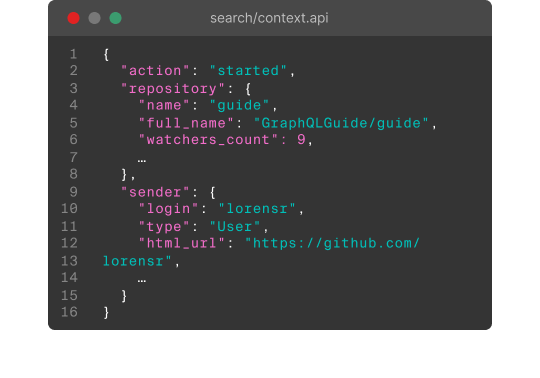

Integration & Extensibility

Pre-built connectors.

GraphQL API & webhooks for custom data lakes and integrations.

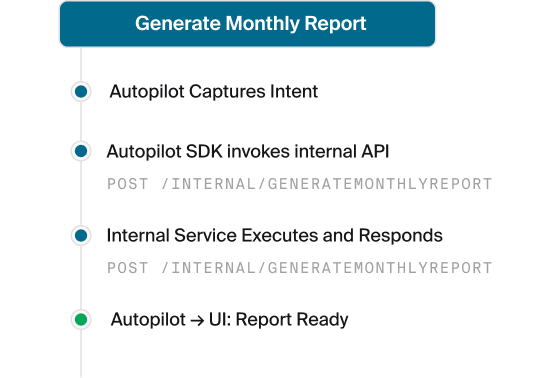

Autopilot SDK.

SDK lets Autopilot invoke internal microservices under your IAM.

API Access.

GraphQL API & webhooks for custom data lakes and integrations.
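
For orientation, a GraphQL call over HTTP is conventionally a POST whose JSON body carries a `query` string and a `variables` object. The sketch below builds such a request with Python's standard library without sending it. The endpoint URL, the `searchMemory` field, its arguments, and the bearer-token header are all invented placeholders; the real schema and authentication scheme are not described in this document.

```python
import json
import urllib.request

# Hypothetical endpoint and schema, for illustration only.
ENDPOINT = "https://context.example.com/graphql"

QUERY = """
query Search($text: String!, $limit: Int!) {
  searchMemory(text: $text, limit: $limit) {
    id
    summary
  }
}
"""


def build_request(text: str, limit: int, token: str) -> urllib.request.Request:
    """Build (but do not send) a GraphQL POST request."""
    body = json.dumps(
        {"query": QUERY, "variables": {"text": text, "limit": limit}}
    )
    return urllib.request.Request(
        ENDPOINT,
        data=body.encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # assumed auth scheme
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_request("renewal clauses", limit=5, token="YOUR_TOKEN")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Passing values through `variables` rather than formatting them into the query string keeps user input out of the query text, which is the usual practice for GraphQL clients.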

Trust Center and Next Steps.

Trust center

AES-256 at rest, TLS 1.3 in transit, envelope-encrypted secrets.

Patch SLA

Zero-day patch SLA: < 24h critical, < 72h high.

Red-team Program

Invitation-based with cash bounties.

Comprehensive Questions and Answers.

Does Context identify key clauses in contracts?

Yes, AI clause detection flags obligations, renewal dates, and risky terms for quick review.

Can Context track regulatory changes that affect our policies?

Absolutely. It monitors trusted sources and alerts you when new rules affect your stored documents.

Is the data inside Context auditable?

Yes, every edit and view is logged, giving you a full chain of custody for compliance audits.

How secure is privileged or client-confidential information?

All data is encrypted, access is role-based, and Context supports on-prem or dedicated cloud deployments for extra control.

Control every obligation at scale.

Centralize contracts, policies, and audit trails under robust permissions to reduce risk and response time.